Populating our Virtual Private Cloud

In the previous post we created our Virtual Private Cloud (VPC) in AWS as infrastructure as code using Terraform, which gave us the ability to stand up and tear down our infrastructure landing pad on demand. Now that our landing pad is complete and can be deployed at any time, let's build our fleet of load-balanced web servers.

Building the Fleet Using Terraform Modules

Taking a similar approach to our VPC build-out, we will once again use Terraform modules, this time to create our web server fleet. In addition to the Terraform Module Registry, there are a number of other sources from which to select ready-built modules, including GitHub. For our web server cluster we will use a short and simple webserver_cluster module that I have made available in my GitHub terraform repository.

This module creates a basic web server cluster that uses an AWS launch configuration and an auto scaling group to spin up the EC2 instances that will be performing as web servers. It also places a load balancer in front of these servers, which balances traffic among them and performs health checks so that requests only reach healthy instances. The module also configures the necessary security groups to allow HTTP traffic inbound. All we need to do is specify the size and number of the web servers and where to land them.
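To make that architecture concrete, here is a minimal sketch of the kind of resources a module like this typically declares. It is not the actual contents of the webserver_cluster module: the resource names, the single shared security group, and the health check settings are assumptions chosen for illustration. The input variable names mirror the arguments passed to the module in this post. It is written in the same pre-0.12 interpolation style as the other snippets here.

```hcl
# Hypothetical sketch only; NOT the real webserver_cluster module source.
variable "cluster_name" {}
variable "ami" {}
variable "instance_type" {}
variable "key_name" {}
variable "min_size" {}
variable "max_size" {}
variable "vpc_id" {}

variable "subnet_ids" {
  type = "list"
}

# Allow HTTP in from anywhere and all traffic out.
# (Assumption: one group shared by the load balancer and the instances.)
resource "aws_security_group" "web" {
  name   = "${var.cluster_name}-web"
  vpc_id = "${var.vpc_id}"

  ingress {
    from_port   = 80
    to_port     = 80
    protocol    = "tcp"
    cidr_blocks = ["0.0.0.0/0"]
  }

  egress {
    from_port   = 0
    to_port     = 0
    protocol    = "-1"
    cidr_blocks = ["0.0.0.0/0"]
  }

  lifecycle {
    create_before_destroy = true
  }
}

# Template for every EC2 instance the auto scaling group launches.
resource "aws_launch_configuration" "web" {
  image_id        = "${var.ami}"
  instance_type   = "${var.instance_type}"
  key_name        = "${var.key_name}"
  security_groups = ["${aws_security_group.web.id}"]

  lifecycle {
    create_before_destroy = true
  }
}

# Classic load balancer with an HTTP health check against each instance.
resource "aws_elb" "web" {
  name            = "${var.cluster_name}-elb"
  subnets         = ["${var.subnet_ids}"]
  security_groups = ["${aws_security_group.web.id}"]

  listener {
    lb_port           = 80
    lb_protocol       = "http"
    instance_port     = 80
    instance_protocol = "http"
  }

  health_check {
    target              = "HTTP:80/"
    interval            = 30
    timeout             = 3
    healthy_threshold   = 2
    unhealthy_threshold = 2
  }
}

# Keeps between min_size and max_size instances registered with the ELB.
resource "aws_autoscaling_group" "web" {
  launch_configuration = "${aws_launch_configuration.web.id}"
  vpc_zone_identifier  = ["${var.subnet_ids}"]
  load_balancers       = ["${aws_elb.web.name}"]
  health_check_type    = "ELB"

  min_size = "${var.min_size}"
  max_size = "${var.max_size}"

  tag {
    key                 = "Name"
    value               = "${var.cluster_name}"
    propagate_at_launch = true
  }
}
```

Setting health_check_type to "ELB" is the design choice that ties the pieces together: the auto scaling group replaces any instance the load balancer reports as unhealthy instead of relying only on EC2 status checks.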

To use this module, we append a module block to our main.tf file that calls webserver_cluster and specifies how our web server fleet should be built.

    module "webserver_cluster" {
      source = "github.com/gmaentz/terraform/modules/services/webserver-cluster"
      cluster_name = "webserver-dev"
      ami = "ami-a9d09ed1"
      key_name = "MyOregonSSH"
      instance_type = "t2.micro"
      min_size = 2
      max_size = 2
      vpc_id = "${module.vpc.vpc_id}"
      subnet_ids = ["${module.vpc.public_subnets}"]
    }

In the code above we call out the source of our webserver_cluster module, which resides in GitHub, and specify a name for our cluster, the image and instance size to use, a key name should we need to connect to an instance, the minimum and maximum number of servers to deploy, and the VPC and subnets to place them in (referenced from our VPC build-out).

In this case we are going to deploy two web servers to the public subnets we built in our VPC.

Deploying the Fleet

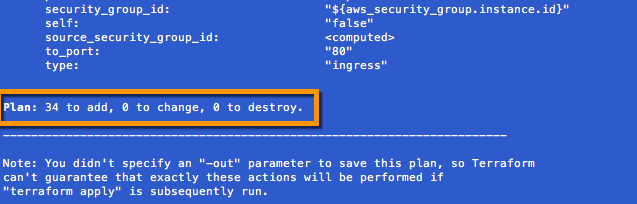

After updating our main.tf file with the code segment above, let's initialize and test the deployment of our web servers. Since we are adding a new module, we must rerun terraform init to load it. We can then execute terraform plan for validation and finally terraform apply to deploy our fleet of web servers to the public subnets of our VPC residing in AWS us-west-2.

Validate the Plan and Deploy using terraform plan and terraform apply.

Accessing the Fleet

So our deployment is complete, but how can we access it? When building infrastructure, Terraform stores hundreds of attribute values for all of our resources. We are often interested in only a few of these values, like the DNS name of our load balancer, which we need to reach the website. Outputs tell Terraform which data is important to show back to the user.

Outputs are declared in the configuration much like variables, and it is considered good practice to organize them in a separate file within our repository. We will create a new file called outputs.tf in the same directory as our main.tf file and specify the key pieces of information about our fleet, including the DNS name of the load balancer, private subnets, public subnets, NAT IPs, etc.

    # VPC
    output "vpc_id" {
      description = "The ID of the VPC"
      value = "${module.vpc.vpc_id}"
    }

    # Subnets
    output "private_subnets" {
      description = "List of IDs of private subnets"
      value = ["${module.vpc.private_subnets}"]
    }

    output "public_subnets" {
      description = "List of IDs of public subnets"
      value = ["${module.vpc.public_subnets}"]
    }

    # NAT gateways
    output "nat_public_ips" {
      description = "List of public Elastic IPs created for AWS NAT Gateway"
      value = ["${module.vpc.nat_public_ips}"]
    }

    output "elb_dns_name" {
      value = "${module.webserver_cluster.elb_dns_name}"
    }

    output "asg_name" {
      value = "${module.webserver_cluster.asg_name}"
    }

    output "elb_security_group_id" {
      value = "${module.webserver_cluster.elb_security_group_id}"
    }

After creating and saving the outputs.tf file, we can issue a terraform refresh against our deployed environment to refresh its state and see the outputs. We could have also issued a terraform output to see these values, and they will be displayed the next time terraform apply is executed.

Browsing to the value contained in our elb_dns_name output, we see our website. Success.

Scaling the Fleet

So now that our fleet is deployed, let's scale it. This is a very simple operation requiring just a small adjustment to the min_size and max_size settings within the webserver_cluster module. We will adjust two lines in main.tf and rerun our plan/deployment.

    ....
    min_size = 8
    max_size = 10
    ....

Voilà. Our web server fleet has now been scaled up with an in-place update and no service disruption. This showcases the power of infrastructure as code and AWS auto scaling groups.

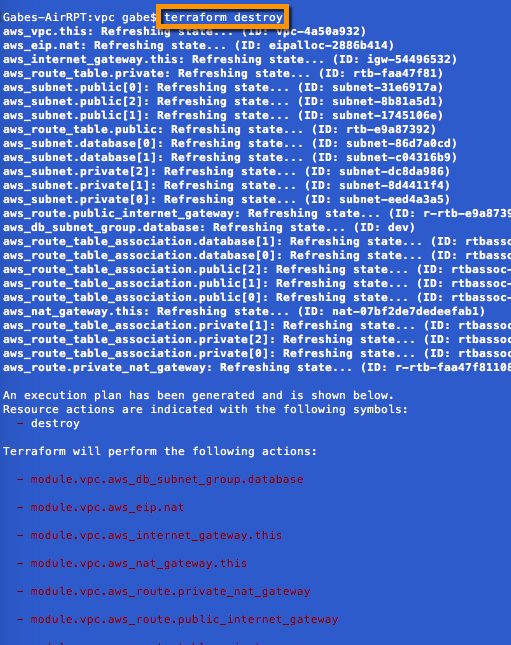
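Rather than editing the two numbers by hand each time, the size settings can be driven by input variables. The following is a minimal sketch, not part of the original configuration: the variable names web_min_size and web_max_size and their defaults are hypothetical, and the module block replaces the one shown earlier rather than being added alongside it.

```hcl
# Hypothetical input variables; names and defaults are illustrative only.
variable "web_min_size" {
  description = "Minimum number of web servers in the auto scaling group"
  default     = 2
}

variable "web_max_size" {
  description = "Maximum number of web servers in the auto scaling group"
  default     = 2
}

# Same module call as before, with the sizes read from the variables above.
module "webserver_cluster" {
  source = "github.com/gmaentz/terraform/modules/services/webserver-cluster"

  cluster_name  = "webserver-dev"
  ami           = "ami-a9d09ed1"
  key_name      = "MyOregonSSH"
  instance_type = "t2.micro"

  min_size = "${var.web_min_size}"
  max_size = "${var.web_max_size}"

  vpc_id     = "${module.vpc.vpc_id}"
  subnet_ids = ["${module.vpc.public_subnets}"]
}
```

With this in place, the scale-up above could be requested at apply time with terraform apply -var 'web_min_size=8' -var 'web_max_size=10', leaving main.tf untouched.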

Scaling the fleet back down is just as easy: lower min_size and max_size and apply again. To clean up entirely, simply issue a terraform destroy to minimize AWS spend and wipe our slate clean.

Multi-Region/Multi-Environment Deployment

Now that we have an easy way to deploy and scale our fleet, the next step is to put our re-usable code to work to build out our development, staging and production environments across AWS regions.

Terraform Series

This is part of a Terraform series in which we cover: