Let's explore a script that leverages DataGravity's file fingerprints to identify the top 10 duplicate files on a given department share or virtual machine.

The Workflow

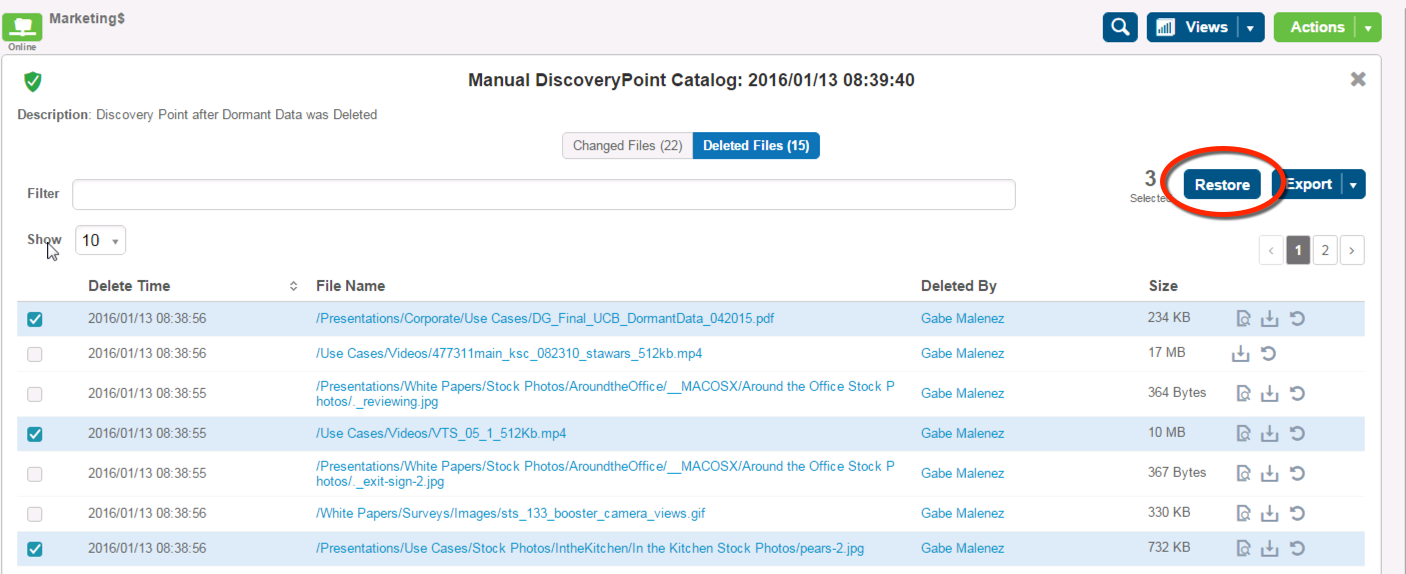

- Export fingerprints and file names to a File List (CSV format)

- Run the FindDuplicateFiles.ps1 PowerShell script

- List the top 10 duplicate files and the space they consume

Files and Fingerprints

DataGravity makes it easy to identify files and their SHA-1 fingerprints on a share or virtual machine (VMware or Hyper-V). Files with identical content share the same fingerprint, which is what makes duplicate detection possible. In this example, we gather the file names and fingerprints from the Sales department share.
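To get a feel for what a fingerprint is, or to spot-check one from the export, a SHA-1 hash can be computed locally. This is a minimal sketch under stated assumptions: it uses PowerShell's built-in `Get-FileHash` (PowerShell 4.0+) and `Set-Content -NoNewline` (5.0+) rather than anything from DataGravity, the helper name `Get-Sha1Fingerprint` is hypothetical, and it assumes the export stores fingerprints as hex strings. PowerShell's `-eq` compares strings case-insensitively, so a lowercase exported fingerprint still matches.

```powershell
# Hypothetical helper (not part of DataGravity): returns the SHA-1 hash of a
# file's contents as an uppercase hex string, via the built-in Get-FileHash.
function Get-Sha1Fingerprint {
    param([Parameter(Mandatory=$true)][string]$Path)
    (Get-FileHash -LiteralPath $Path -Algorithm SHA1).Hash
}

# Demo: two files with different names but identical content
# produce identical fingerprints.
$demoDir = Join-Path ([System.IO.Path]::GetTempPath()) ("fp-demo-" + [guid]::NewGuid())
New-Item -ItemType Directory -Path $demoDir | Out-Null
$fileA = Join-Path $demoDir "report.txt"
$fileB = Join-Path $demoDir "report-copy.txt"
Set-Content -LiteralPath $fileA -Value "abc" -NoNewline -Encoding ASCII
Set-Content -LiteralPath $fileB -Value "abc" -NoNewline -Encoding ASCII

$fpA = Get-Sha1Fingerprint $fileA
$fpB = Get-Sha1Fingerprint $fileB
"$fpA  same=$($fpA -eq $fpB)"
# prints: A9993E364706816ABA3E25717850C26C9CD0D89D  same=True

# Clean up the temporary demo files
Remove-Item -LiteralPath $demoDir -Recurse
```

The file names play no part in the hash, which is why grouping an export by fingerprint finds copies even when they have been renamed.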

The Script:

`.\FindDuplicateFiles.ps1 -csvFilePath "c:\temp\sales.csv" -top 10`

Script parameters:

`-csvFilePath`: the path to the CSV file exported in the first step, which lists the files and their fingerprints (the script reads its `fingerprint` and `size` columns). This file is an export from DataGravity's Search.

`-top`: optional. If specified, the script shows only that many entries, ranked by number of copies. If omitted, all results are returned, showing only the count and fingerprint.
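To make the two parameters concrete, here is a small sketch that runs the same kind of grouping on an inline, made-up export instead of a file. Only the `fingerprint` and `size` column names come from the script; the `name` column, the sample values, and `$top = 1` are hypothetical. Unlike the original listing, it also drops fingerprints that occur only once, so every entry it returns is an actual duplicate.

```powershell
# Made-up export: only the "fingerprint" and "size" columns are read by the
# script; the "name" column and all values here are hypothetical.
$rows = @"
name,fingerprint,size
report.docx,AAA111,2048
report-copy.docx,AAA111,2048
report-final.docx,AAA111,2048
logo.png,BBB222,512
logo-old.png,BBB222,512
notes.txt,CCC333,100
"@ | ConvertFrom-Csv

# Equivalent of passing -top 1
$top = 1

# Group identical (fingerprint, size) pairs, keep only real duplicates,
# rank by number of copies, and cap the list at $top entries.
$groups = @($rows |
    Group-Object -Property fingerprint,size |
    Where-Object { $_.Count -gt 1 } |
    Sort-Object Count -Descending |
    Select-Object -First $top)

$groups | ForEach-Object {
    "{0} copies of {1} ({2} bytes each)" -f $_.Count, $_.Values[0], $_.Values[1]
}
# prints: 3 copies of AAA111 (2048 bytes each)
```

With `$top = 0` and the `Select-Object` step removed, both AAA111 and BBB222 would be listed, while CCC333 (a single copy) never appears.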

Listing and Validating Duplicates

Let's run the script to return the top 10 duplicate files and their file sizes.

These results can, of course, be validated; in this example, the files returned are the duplicates consuming the most space.
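The script ranks entries by number of copies and reports the size of a single copy. To validate a result, or to rank by the space the duplicates actually consume, the grouped data can be extended as in the sketch below. Assumptions: the export has a file-name column, called `name` here purely hypothetically; all sample values are invented; files sharing a fingerprint are assumed to share a size (taken from the first row of each group); and "reclaimable" space assumes one copy of each file is kept.

```powershell
# Hypothetical export rows (the "name" column and all values are made up).
$rows = @"
name,fingerprint,size
big.iso,F00D01,734003200
big-copy.iso,F00D01,734003200
memo.txt,BEEF02,2048
memo (1).txt,BEEF02,2048
memo (2).txt,BEEF02,2048
"@ | ConvertFrom-Csv

$report = @($rows |
    Where-Object { -not [string]::IsNullOrWhiteSpace($_.fingerprint) } |
    Group-Object -Property fingerprint |
    Where-Object { $_.Count -gt 1 } |
    ForEach-Object {
        # Same fingerprint implies same content, so use the first row's size
        $size = [double]($_.Group[0].size)
        [pscustomobject]@{
            Fingerprint = $_.Name
            Copies      = $_.Count
            TotalKB     = $_.Count * $size / 1KB
            ReclaimKB   = ($_.Count - 1) * $size / 1KB   # space freed by keeping one copy
            Files       = ($_.Group.name -join '; ')      # names to check by hand
        }
    } |
    Sort-Object ReclaimKB -Descending)

$report | Format-Table -AutoSize
```

Ranking by `ReclaimKB` puts the two large ISO copies first even though the small memo has more copies, which is usually the more useful ordering when the goal is freeing space.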

The full PowerShell script is listed below and is also available in my PowerShell repo on GitHub.

```powershell
#########################################
## DataGravity Inc.
## January 2016
## Find number of duplicate files and size from exported CSV file
## Tested with DataGravity Software v2.2
## Free to distribute and modify
## THIS SCRIPT IS PROVIDED WITHOUT WARRANTY, ALWAYS FULLY BACK UP DATA BEFORE INVOKING ANY SCRIPT
## ALWAYS VERIFY NO BLANK ROWS IN BETWEEN DATA IN CSV
##########################################

##########################################
## Instructions:
## 1) Use DataGravity UI to filter by files, dormant data, etc.
## 2) Export CSV file
## 3) Pass the CSV path with -csvFilePath
## 4) Run script
##
## Ex. .\FindDuplicateFiles.ps1 -csvFilePath "c:\temp\sales.csv" -top 10
##
##########################################

##----------------------------------------
## Input Parameters
##----------------------------------------
param (
    [Parameter(Mandatory=$true)]
    [string]$csvFilePath,

    ## Optional: number of results to show (0 or omitted = show all)
    [int]$top
)

## Read the export once and skip rows with an empty fingerprint
$rows = Import-Csv $csvFilePath |
    Where-Object { -not [string]::IsNullOrWhiteSpace($_.fingerprint) }

## If top number is not specified then return all duplicates.
## A fingerprint seen only once is not a duplicate, so Count must exceed 1.
if ($top -eq 0)
{
    $rows | Group-Object -Property fingerprint -NoElement |
        Where-Object { $_.Count -gt 1 } |
        Sort-Object Count -Descending |
        Format-Table -AutoSize
}
else
{
    $rows | Group-Object -Property fingerprint,size |
        Where-Object { $_.Count -gt 1 } |
        Sort-Object Count -Descending |
        Select-Object -First $top |
        Format-Table Count,
            @{Label="Data Fingerprint"; Expression={$_.Values[0]}},
            @{Label="File Size (KB)"; Expression={[double]($_.Values[1]) / 1KB}} -AutoSize
}
```