My last blog article received some great attention, which highlights the growing demand for understanding and determining where sensitive data lives on corporate endpoints. That post walked through the steps taken with a great free product by Veeam Software called Veeam Endpoint Protection, and coupled it with the power of DataGravity's File Analytics on a simple SMB share.

In fact, the popularity of the article spurred a question at a local VMUG: could DataGravity provide the same level of insight into replicated VMs sitting at a DR location? It just so happens that this customer is already using Veeam Backup & Replication to back up and replicate VMs to a DR environment every night - ready to fail over, should the need arise. The customer asked, 'Rather than simply replicating these VMs to an otherwise unintelligent data repository, could I replicate to a DataGravity datastore at my DR location to make use of the VM File Analytics?' This is a great use case and the answer is YES.

You may be asking - replicated VMs? Doesn't DataGravity have to act as your primary storage? DataGravity is indeed enterprise primary storage, but nothing prohibits it from also serving as a great backup/replication target. In fact, DataGravity by design natively performs its analytics on VMDK files residing on NFS datastores, regardless of whether those datastores are serving as primary storage or as a replication target, and regardless of the VM's power state. This means VMs can be replicated, remain powered off at the DR location, and be analyzed non-intrusively for sensitive information. Very powerful. Let's see how to do it:

Create Target VM Datastore for Replica VMs

The first step is to create a VM datastore which will be used as the replication target. We will call it 'DataMRI' - because we plan on taking a deeper look into the health of our replicated VMs, so the name fits.

This is a simple four-step process, which the DataGravity 'Create Datastore' setup will walk you through.

- Specify a name and size for the datastore

- Specify which ESXi hosts the datastore should be attached to

- Specify the Discovery Point Policy - this is the frequency at which the Data Analytics will be run on the replicated VMs.

- Validate the datastore is attached to your ESXi hosts at the DR location. You can see that the datastore is online and ready to receive VMs.

Configure Veeam Replication Job

Since this customer is already using Veeam replication, it is very simple to modify an existing replication job, or create a new one, that specifies the newly created datastore (DataMRI) at the DR location as the replication target. As I have indicated in previous articles, I like the simplicity and flexibility that Veeam provides for my backups, and their replication engine serves very well in a modern data protection architecture. It offers image-based VM replicas, built-in WAN Acceleration, and the ability to fail over and fail back to/from those replicas.

To create a Replication Job, log into Veeam and step through the New Replication Job setup.

1. Create a New VMware Replication Job

2. Specify a name for the Replication Job

3. Specify the VMs to include for replication.

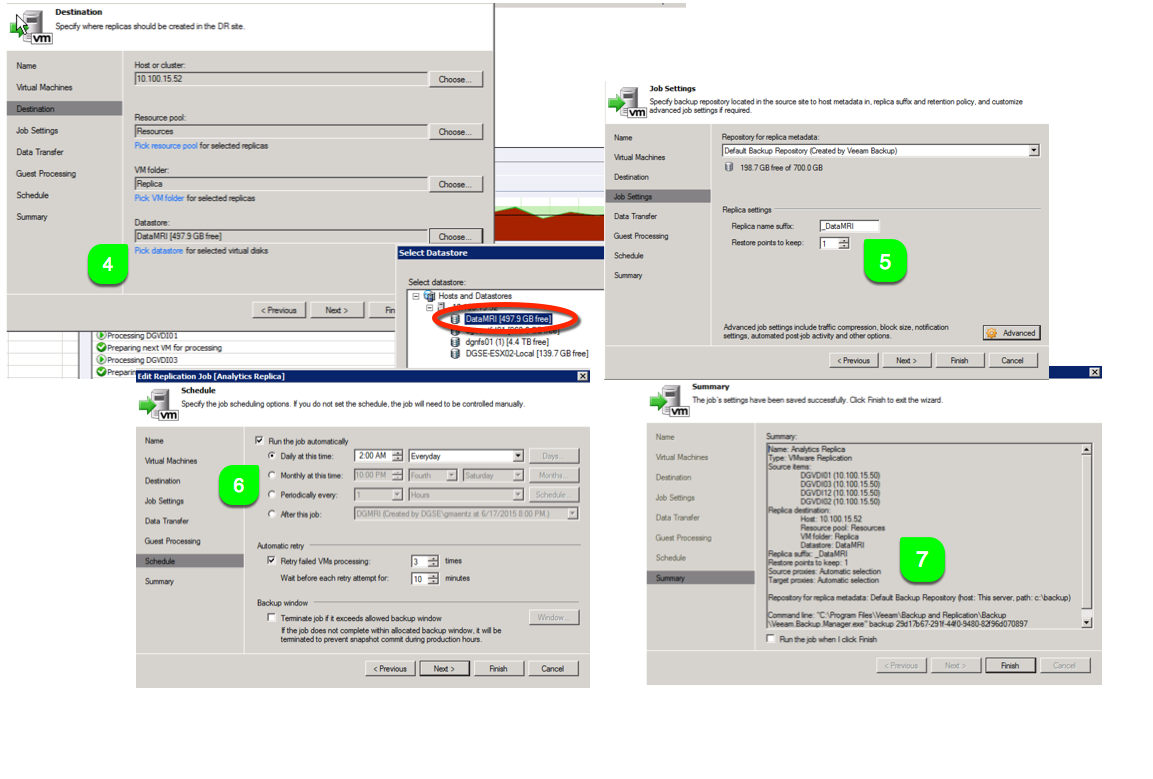

4. Specify the destination to replicate to: the Host, Resource Pool, Folder, and the Datastore (DataMRI) that was created in 'Create Target VM Datastore for Replica VMs' above.

5. I like to append a suffix to my replicated VMs so I know that these are replicas, so I appreciate this option in Veeam. I used the suffix _DataMRI, and I chose to keep only 1 restore point.

6. Schedule the time for the replication to occur - I chose every night at 2:00 AM.

7. Review the summary details and save the job.
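To make the choices from the wizard concrete, here is a minimal Python sketch that records them as plain data and derives the replica names and the next nightly run. This is only an illustration and not a Veeam API: the class `ReplicationJobSettings`, its field names, the job name, and the example date are all hypothetical, while the suffix, restore point count, and 2:00 AM schedule come from the steps above.

```python
from dataclasses import dataclass
from datetime import datetime, time, timedelta


@dataclass(frozen=True)
class ReplicationJobSettings:
    """Hypothetical record of the wizard choices; not part of any Veeam SDK."""
    job_name: str
    source_vms: tuple
    target_datastore: str = "DataMRI"   # datastore created earlier
    replica_suffix: str = "_DataMRI"    # suffix appended to each replica
    restore_points: int = 1             # restore points to keep
    daily_start: time = time(2, 0)      # every night at 2:00 AM

    def replica_names(self):
        # Each replica is named after its source VM plus the suffix.
        return [vm + self.replica_suffix for vm in self.source_vms]

    def next_run(self, now):
        # Today's 2:00 AM slot if it has not passed yet, otherwise tomorrow's.
        candidate = datetime.combine(now.date(), self.daily_start)
        if candidate <= now:
            candidate += timedelta(days=1)
        return candidate


# The job name and the date below are made up for the example.
job = ReplicationJobSettings(job_name="Replicate to DataMRI", source_vms=("DGVDI01",))
print(job.replica_names())                        # ['DGVDI01_DataMRI']
print(job.next_run(datetime(2015, 6, 1, 9, 30)))  # 2015-06-02 02:00:00
```

Writing the settings down this way is a handy check before saving the job: the replica name should be easy to tell apart from the source VM, and the schedule should land outside business hours.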

Replicate VMs

Now that we have the target datastore defined for our VM replicas and the replication job in place, let's replicate. The timing of the replication will of course follow the schedule you specified above in the Veeam Replication job, and below you can see the status of the job on a per-VM basis.

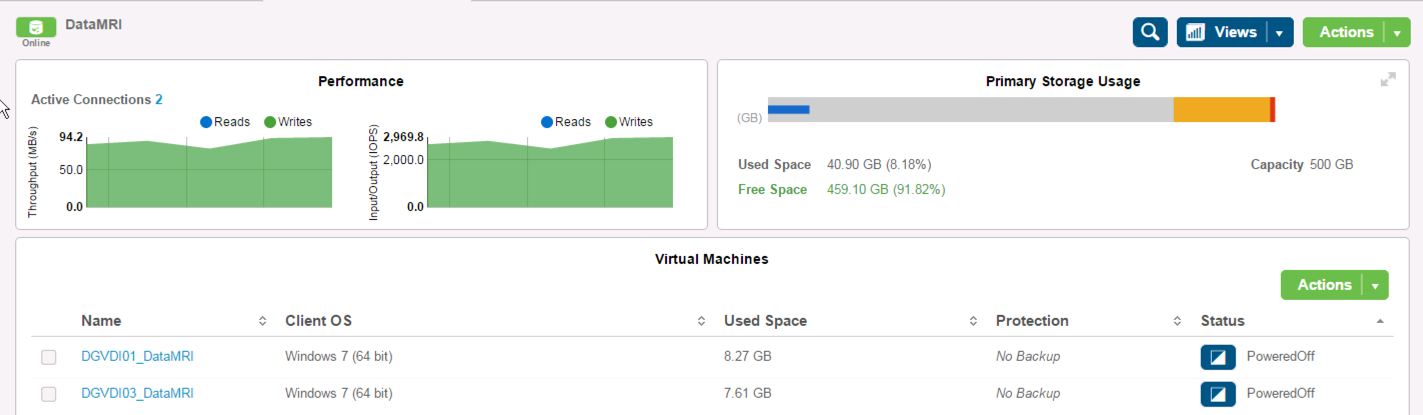

We can also see the real-time performance on the target DataGravity datastore, as well as the status of the VM replicas which are starting to populate the target.

Now that we have VMs starting to come over to the target DataGravity datastore, let's take a look at the Analytics and information that these VMs are holding.

VM Analytics

To begin, let's take a look at the File Analytics view from within DataGravity. This view allows us to search and uncover all the details for our replicated VMs and the data which they contain.

Looking at one of the replicated VMs - DGVDI01_DataMRI - we can start to see some critical information. We can see the Top Users of the VM, the Most Active Users on that VM, Dormant Data, and File Growth over time. Additionally, we can see that the VM contains a number of files with Social Security and Credit Card numbers. There are 15 files with Social Security numbers on this VM, so let's dig into that.

The impressive thing is that the replicated VM doesn't need to be powered on at all to see this level of detail, so it doesn't disrupt the data protection architecture or DR procedure.

Further detail on the makeup of these files, as well as a full listing, is only a click away. Here is a list of the 15 files stored on this VM that contain Social Security numbers. This is making tremendous use of our VM Replicas.
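For readers curious about what flagging a file for Social Security or Credit Card numbers can involve, here is a minimal Python sketch of pattern-based detection. This is not DataGravity's implementation, which is not described in this article. It is only a generic illustration that assumes a regular expression for SSN-style strings and a Luhn checksum to weed out digit runs that cannot be card numbers. The function names and sample text are made up, and the card number is a widely published test number.

```python
import re

# SSN-style pattern: AAA-GG-SSSS, skipping area/group/serial values never issued.
SSN_RE = re.compile(r"\b(?!000|666|9\d\d)\d{3}-(?!00)\d{2}-(?!0000)\d{4}\b")
# 13 to 16 digits, optionally separated by single spaces or hyphens.
CARD_RE = re.compile(r"\b\d(?:[ -]?\d){12,15}\b")


def luhn_ok(digits):
    """Return True if the digit string passes the Luhn checksum."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:      # double every second digit from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def scan_text(text):
    """Collect SSN-like strings and Luhn-valid card-like numbers from text."""
    cards = []
    for match in CARD_RE.finditer(text):
        digits = re.sub(r"\D", "", match.group())
        if luhn_ok(digits):
            cards.append(digits)
    return {"ssn": SSN_RE.findall(text), "card": cards}


sample = ("Employee SSN 123-45-6789, card 4111 1111 1111 1111, "
          "order 1234 5678 9012 3456.")
print(scan_text(sample))
# {'ssn': ['123-45-6789'], 'card': ['4111111111111111']}
```

The order number in the sample is ignored because it fails the checksum, which shows why a checksum step helps cut down false positives compared with matching digit patterns alone.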

Analyze VMs and Find Sensitive Data with Zero Impact

Replication can now serve as more than just a safety net. Why not combine the power of Veeam Replication with DataGravity Analytics in your backup and DR strategy? You will not only be ready to fail over in the event of a service disruption, but also be informed of how that data is growing and what sensitive information is being saved within the infrastructure.

Many thanks to my customer base for presenting such a great use case, and for allowing me to share it.